Top 10 Best Practices for Architecting an LLM App

by Clement Chang

StreamDeploy is a platform for deploying applications to the cloud. It comprises a variety of sub-applications that handle the intermediate steps of cloud deployment, such as software containerization, cloud configuration, and CI/CD pipeline YAML creation. The StreamDeploy Dockerfile generator, which is free for everyone, automates the creation of Dockerfiles for GitHub repositories so that applications can be containerized easily and deployed reliably, scalably, and cost-effectively.

The StreamDeploy team is sharing our learnings from building our LLM-powered deployment platform. In the following article, we outline the architecture of the StreamDeploy Dockerfile generator, focusing on its components, technologies, and the interactions between them.

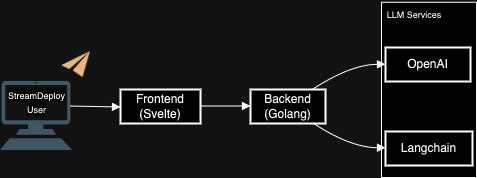

StreamDeploy Architecture Summary

The StreamDeploy architecture is designed for scalability, reliability, and user convenience. The core tech stack features Svelte for the frontend, Go for the backend, LangChain and OpenAI for the LLM service, and AWS for deployment and hosting.

Dockerfile Generator Architecture

Frontend: Constructed with Svelte, our frontend delivers a responsive and intuitive interface for users to interact with our tool, facilitating an effortless Dockerfile generation process.

Backend: Crafted in Go, the backend is the powerhouse of our application, capable of handling concurrent requests with high efficiency. It acts as the central hub for processing and orchestrating the interactions between the frontend and our LLM integrations.

LLM Integration: Our platform integrates with both OpenAI’s API and LangChain. OpenAI supplies the models, while LangChain is a framework for building applications around LLM calls rather than a model provider, so the two play complementary roles in generating precise and optimized Dockerfiles.

Deployment: Our infrastructure is hosted on AWS, using ECS (Elastic Container Service) for container management, RDS (Relational Database Service) for the database, and S3 (Simple Storage Service) for object storage. This setup gives the application a scalable, secure, and resilient hosting environment.

Component Diagram

Data Flow

1. User Interaction: Users authenticate via the Svelte frontend and submit GitHub repository URLs for Dockerfile generation.

2. API Request: The frontend sends the request to the backend via AWS API Gateway.

3. LLM Processing: The backend communicates with the OpenAI API, sending repository data and receiving Dockerfile generation instructions.

4. Dockerfile Generation: The backend processes the LLM’s instructions, generates the Dockerfile, and stores it in AWS S3.

5. Data Storage: User and request data are stored in AWS RDS for record-keeping and analytics.

6. Response to User: The generated Dockerfile is returned to the user through the frontend interface.
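The six steps above can be read as a single request handler. The following is a minimal Python sketch of that flow, not StreamDeploy’s actual Go backend: the `Pipeline` class, `handle_request`, and `fake_llm` are hypothetical names, and the in-memory `object_store` and `request_log` merely stand in for S3 and RDS.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Pipeline:
    """Hypothetical stand-in for the backend's request pipeline."""

    # Takes a repository URL and returns Dockerfile text (stands in for the LLM call).
    llm: Callable[[str], str]
    object_store: Dict[str, str] = field(default_factory=dict)  # stands in for S3
    request_log: List[dict] = field(default_factory=list)       # stands in for RDS

    def handle_request(self, user_id: str, repo_url: str) -> str:
        # Steps 1-2: the frontend submits a GitHub URL; reject anything else early.
        if not repo_url.startswith("https://github.com/"):
            raise ValueError("expected a GitHub repository URL")
        # Steps 3-4: ask the LLM for a Dockerfile and store the result.
        dockerfile = self.llm(repo_url)
        key = f"{user_id}/{repo_url.rsplit('/', 1)[-1]}/Dockerfile"
        self.object_store[key] = dockerfile
        # Step 5: record the request for record-keeping and analytics.
        self.request_log.append({"user": user_id, "repo": repo_url, "key": key})
        # Step 6: return the Dockerfile to the caller.
        return dockerfile


def fake_llm(repo_url: str) -> str:
    """Deterministic placeholder so the sketch runs without network access."""
    return 'FROM python:3.12-slim\nCMD ["python", "app.py"]\n'
```

Passing the LLM call in as a function keeps the pipeline testable: a real client can be swapped in for `fake_llm` without changing the storage or logging steps.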

Scalability and Reliability

Load Balancing: AWS Elastic Load Balancing distributes incoming application traffic across multiple targets, improving the scalability and reliability of the application.

Auto Scaling: AWS ECS and EC2 Auto Scaling adjust capacity to maintain steady, predictable performance at the lowest possible cost.

Database Replication: AWS RDS Multi-AZ deployments provide enhanced availability and durability for Database Instances, making them a natural fit for production database workloads.

Security

IAM Roles: AWS Identity and Access Management (IAM) roles ensure that each application component has access only to the resources necessary for its operation.

Data Encryption: AWS services like RDS and S3 support encryption at rest, which we enable so that stored data is encrypted on disk.

Network Security: Security groups and network ACLs in AWS VPC provide a robust security layer at the network level.
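To illustrate the least-privilege idea behind the IAM roles above, here is a small sketch that builds an IAM policy document granting read and write access to a single S3 prefix and nothing else. The helper `s3_prefix_policy`, the bucket name `example-dockerfiles`, and the prefix `generated` are assumptions made for this example, not StreamDeploy’s real configuration.

```python
import json


def s3_prefix_policy(bucket: str, prefix: str) -> dict:
    """Build an IAM policy that allows object reads and writes under one prefix only."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # Only the two actions the component needs; no wildcards.
                "Action": ["s3:GetObject", "s3:PutObject"],
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}/*",
            }
        ],
    }


# Hypothetical bucket and prefix, used only for illustration.
policy = s3_prefix_policy("example-dockerfiles", "generated")
print(json.dumps(policy, indent=2))
```

Attaching a policy like this to the role used by the backend means a compromised component cannot list other buckets or delete objects.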

10 Best Practices for Architecting LLM Applications

Building StreamDeploy’s AI-powered Dockerfile generator taught us that LLM applications need practices tailored to how they are developed and deployed. These practices help an application meet its functional requirements while maintaining high standards of safety, security, robustness, and performance. Here are our top 10 best practices for LLM app architecture:

1. Implement Layered Security Measures

Given the sensitive nature of data processed by LLM applications, adopt a multi-layered security approach. This includes network segmentation, firewalls, intrusion detection systems, and regular security audits to protect against potential breaches.

For network segmentation, consider dividing your network into subnetworks to isolate the LLM application from other systems. Use firewalls to control traffic between these segments. For intrusion detection, tools like Snort or Suricata can monitor network traffic for suspicious activity. Regular security audits can be automated with tools like Nessus or OpenVAS.

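    # Example of using iptables for a basic firewall setup
    iptables -A INPUT -i lo -j ACCEPT
    iptables -A INPUT -m conntrack --ctstate ESTABLISHED,RELATED -j ACCEPT
    iptables -A INPUT -p tcp -m multiport --dports 80,443 -j ACCEPT
    # Drop all other inbound TCP; add an SSH rule above this line if you manage the host remotely
    iptables -A INPUT -p tcp -j DROP

2. Utilize Secure APIs for LLM Integrations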

When calling OpenAI’s API, ensure communication is over HTTPS. Implement rate limiting using a tool like nginx or a cloud service feature. For access control, manage API keys securely, using environment variables or secrets management services like AWS Secrets Manager.
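Rate limiting is usually enforced at the proxy or gateway, but the idea can also be sketched in application code. The token bucket below is one common approach, shown here as an assumption rather than as the mechanism StreamDeploy uses; the class name `TokenBucket` and its parameters are hypothetical.

```python
import time
from typing import Callable


class TokenBucket:
    """Allow bursts of up to `capacity` calls, refilled at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: int,
                 clock: Callable[[], float] = time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock          # injectable so the limiter can be tested without sleeping
        self.tokens = float(capacity)
        self.last = clock()

    def allow(self) -> bool:
        """Return True and consume a token if a call may proceed right now."""
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

A caller would check `bucket.allow()` before each outbound API request and either queue or reject the request when it returns `False`.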

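    # Example of securely calling OpenAI's API with Python requests
    import os

    import requests

    # Read the key from the environment; a missing key raises KeyError instead of sending "Bearer None"
    api_key = os.environ["OPENAI_API_KEY"]
    headers = {"Authorization": f"Bearer {api_key}"}

    response = requests.post(
        "https://api.openai.com/v1/chat/completions",
        headers=headers,
        json={
            # Model name is an example; substitute one available to your account
            "model": "gpt-4o-mini",
            "messages": [{"role": "user", "content": "Translate the following English text to French: Hello"}],
            "max_tokens": 60,
        },
        timeout=5,  # Timeout for the request, in seconds
    )
    response.raise_for_status()  # Surface HTTP errors such as 401 or 429

3. Regularly Update LLM Models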

Keep your LLM models up to date to benefit from the latest improvements in accuracy and efficiency. Regular updates can also patch vulnerabilities that might compromise the application’s integrity.

Automate the process of checking for model updates using CI/CD pipelines. Incorporate scripts in your deployment process to fetch the latest model versions and run validation tests before rolling them out to production.
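The validation step is the part most worth automating. As a rough sketch, a candidate model can be gated behind a small set of golden prompts and promoted only if it passes; `GOLDEN_CASES`, `passes_validation`, and `choose_model` are hypothetical names, and the substring check is a deliberately simple stand-in for a real evaluation suite.

```python
from typing import Callable, Dict

# Hypothetical golden cases: prompt -> substring the output must contain.
GOLDEN_CASES: Dict[str, str] = {
    "Write a Dockerfile for a Python app": "FROM",
    "Write a Dockerfile for a Go app": "FROM",
}


def passes_validation(generate: Callable[[str], str], min_pass_rate: float = 1.0) -> bool:
    """Run every golden prompt through `generate` and compare the pass rate to the threshold."""
    passed = sum(
        1 for prompt, expected in GOLDEN_CASES.items() if expected in generate(prompt)
    )
    return passed / len(GOLDEN_CASES) >= min_pass_rate


def choose_model(current: str, candidate: str,
                 make_generator: Callable[[str], Callable[[str], str]]) -> str:
    """Return the candidate model name only if it passes validation; otherwise keep the current one."""
    return candidate if passes_validation(make_generator(candidate)) else current
```

In a pipeline, `make_generator` would wrap the real model client, and the value returned by `choose_model` would be written to the deployment configuration.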

    # Example Bash script snippet for updating an LLM model
    echo "Checking for model updates..."
    # Placeholder for model update check logic
    echo "Model update available. Updating..."
    # Placeholder for update logic
    echo "Running validation tests..."
    # Placeholder for test execution logic
    echo "Update and validation complete."

4. Optimize Data Handling and Storage

Data is the lifeblood of LLM applications. Implement best practices for data handling and storage, including encryption at rest and in transit, minimal data retention policies, and secure access protocols.

Encrypt data at rest using AWS RDS encryption options and ensure data in transit is encrypted using TLS. Implement policies for minimal data retention directly in your database management system or application logic.
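A minimal retention policy in application logic can be as simple as a scheduled purge. The sketch below uses an in-memory SQLite database in place of RDS so that it runs anywhere; the `requests` table, the `created_at` column (Unix seconds), and the 30-day window are all assumptions chosen for illustration.

```python
import sqlite3

RETENTION_DAYS = 30  # assumed policy; pick a value that matches your requirements
SECONDS_PER_DAY = 86400


def make_db() -> sqlite3.Connection:
    """Create an in-memory database with a hypothetical request-history table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE requests (id INTEGER PRIMARY KEY, repo_url TEXT, created_at REAL)"
    )
    return conn


def purge_expired(conn: sqlite3.Connection, now_ts: float,
                  retention_days: int = RETENTION_DAYS) -> int:
    """Delete rows older than the retention window and return how many were removed."""
    cutoff = now_ts - retention_days * SECONDS_PER_DAY
    cur = conn.execute("DELETE FROM requests WHERE created_at < ?", (cutoff,))
    conn.commit()
    return cur.rowcount
```

Running `purge_expired(conn, time.time())` from a daily job keeps the table limited to the retention window.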

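    # RDS encryption at rest is enabled when the instance is created (AWS CLI), not through SQL
    aws rds create-db-instance --db-instance-identifier my-database \
      --engine postgres --db-instance-class db.t3.micro --allocated-storage 20 \
      --master-username dbadmin --manage-master-user-password --storage-encrypted

5. Incorporate Automated Testing and Validation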

Develop comprehensive test suites to cover various aspects of the application, including performance, security, and functional correctness. Use automated testing tools to regularly scan for vulnerabilities and ensure the application behaves as expected under different conditions.

Use a CI/CD tool like Jenkins or GitHub Actions to automate the running of test suites. Include static code analysis, security vulnerability scanning, and performance benchmarking in your testing pipeline.
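For an application whose output is itself code, functional tests can include structural checks on what the LLM produces. The following sketch inspects generated Dockerfile text; `dockerfile_problems` is a hypothetical helper, and treating a missing `CMD` or `ENTRYPOINT` as a problem is a project convention assumed here, not a Docker requirement.

```python
from typing import List


def dockerfile_problems(text: str) -> List[str]:
    """Return a list of structural problems found in Dockerfile text."""
    # Keep the first word of every non-blank, non-comment line. This is a
    # simplification: continuation lines are treated like instructions too.
    words = [
        line.split()[0].upper()
        for line in text.splitlines()
        if line.strip() and not line.lstrip().startswith("#")
    ]
    if not words:
        return ["file is empty"]
    problems = []
    # FROM must come first, optionally preceded by ARG lines.
    first_non_arg = next((w for w in words if w != "ARG"), None)
    if first_non_arg != "FROM":
        problems.append("first instruction is not FROM")
    if not any(w in ("CMD", "ENTRYPOINT") for w in words):
        problems.append("no CMD or ENTRYPOINT")
    return problems
```

A test suite can call this on every generated file and fail the build when the returned list is not empty.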

    # Example GitHub Actions workflow for running tests
    name: LLM Application Tests
    on: [push, pull_request]
    jobs:
      build-and-test:
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@v4
          - name: Run tests
            run: make test

6. Adopt Containerization Best Practices

Use containerization to make deployments repeatable. Optimize Dockerfiles by using multi-stage builds to reduce image size and remove unnecessary tools or services that could introduce vulnerabilities. Scan images for vulnerabilities with a tool like Trivy, and audit the Docker host and daemon configuration with Docker Bench for Security.

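    # Example of a multi-stage Dockerfile
    FROM python:3.12-slim AS builder
    WORKDIR /app
    COPY requirements.txt .
    # Install dependencies into a virtual environment that can be copied as a unit
    RUN python -m venv /opt/venv && /opt/venv/bin/pip install --no-cache-dir -r requirements.txt

    FROM python:3.12-slim AS runtime
    # Bring over only the installed dependencies, not the build stage's caches
    COPY --from=builder /opt/venv /opt/venv
    ENV PATH="/opt/venv/bin:$PATH"
    WORKDIR /app
    COPY . .
    CMD ["python", "app.py"]

7. Design for Failover and Redundancy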

Build redundancy into your LLM application architecture to ensure high availability. Use load balancers and replicate critical components across different zones or regions to mitigate the impact of partial system failures.

Utilize AWS Auto Scaling Groups and Elastic Load Balancing to distribute traffic across instances in multiple availability zones. This ensures your application remains available even if one zone goes down.
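Load balancers handle failover at the infrastructure level, and the same idea can be applied inside the application when it calls a replicated dependency. The sketch below tries each endpoint in order and moves on when a connection fails; `call_with_failover` and the zone names used with it are hypothetical.

```python
from typing import Callable, Optional, Sequence, TypeVar

T = TypeVar("T")


def call_with_failover(endpoints: Sequence[str], call: Callable[[str], T]) -> T:
    """Try each endpoint in order and return the first successful result."""
    last_error: Optional[Exception] = None
    for endpoint in endpoints:
        try:
            return call(endpoint)
        except ConnectionError as exc:
            # Remember the failure and fall through to the next replica.
            last_error = exc
    raise RuntimeError("all endpoints failed") from last_error
```

Only `ConnectionError` triggers failover in this sketch; errors such as invalid input are raised immediately, since retrying them on another replica would not help.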

    # Example Terraform configuration for an Auto Scaling Group with multi-AZ
    resource "aws_autoscaling_group" "example" {
      availability_zones = ["us-west-2a", "us-west-2b"]
      ...
    }

8. Monitor LLM Application Performance in Real-Time

Implement real-time monitoring with tools such as Prometheus for metrics collection and alerting and Grafana for dashboards, so you can track the performance and health of your LLM application. Monitoring allows for the early detection of anomalies or degradations in service, enabling quick remediation. Set up alerts based on custom thresholds to notify the team of potential issues.
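The logic behind a threshold alert is simple enough to sketch without any monitoring stack. The class below keeps a rolling window of request latencies and reports when the average exceeds a threshold; `LatencyMonitor` is a hypothetical name, the 0.5 second default matches the threshold in this section's example alert rule, and the sketch does not model the rule's `for: 1m` hold period.

```python
from collections import deque


class LatencyMonitor:
    """Track recent request latencies and flag a high rolling average."""

    def __init__(self, threshold_seconds: float = 0.5, window: int = 100):
        self.threshold = threshold_seconds
        # A bounded deque discards the oldest sample once the window is full.
        self.samples = deque(maxlen=window)

    def record(self, seconds: float) -> None:
        self.samples.append(seconds)

    def average(self) -> float:
        return sum(self.samples) / len(self.samples) if self.samples else 0.0

    def should_alert(self) -> bool:
        return self.average() > self.threshold
```

In production the same measurement would normally be exported as a metric and evaluated by the monitoring system rather than inside the application.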

    # Example Prometheus alert rule for high response time
    groups:
      - name: high_response_time
        rules:
          - alert: HighResponseTime
            expr: job:request_duration_seconds:avg > 0.5
            for: 1m
            labels:
              severity: page
            annotations:
              summary: High response time detected

9. Apply AI Ethics and Fairness Guidelines

When building LLM applications, adhere to ethical guidelines and fairness principles to prevent bias and ensure equitable outcomes for all users. Regularly audit your LLM models for bias by analyzing model predictions across different demographics, and adjust training data or algorithms as necessary. Utilize fairness toolkits like AI Fairness 360 to identify and mitigate potential biases in your models.
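To make the bias audit concrete, the sketch below computes one common group-fairness measure in plain Python: the difference in favorable-outcome rates between the unprivileged and privileged groups, often called the statistical parity difference. As we understand it, this is the quantity that AI Fairness 360 reports as `mean_difference`; the function here is a simplified stand-in with hypothetical inputs, not the library's implementation.

```python
from typing import Sequence


def mean_difference(labels: Sequence[int], privileged: Sequence[bool]) -> float:
    """Return P(favorable | unprivileged) - P(favorable | privileged).

    `labels` holds 1 for a favorable outcome and 0 otherwise; `privileged`
    marks which examples belong to the privileged group.
    """
    priv = [y for y, p in zip(labels, privileged) if p]
    unpriv = [y for y, p in zip(labels, privileged) if not p]
    if not priv or not unpriv:
        raise ValueError("both groups need at least one example")
    return sum(unpriv) / len(unpriv) - sum(priv) / len(priv)
```

A value of 0 means both groups receive favorable outcomes at the same rate, and a negative value means the unprivileged group receives them less often.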

    # Example snippet for using AI Fairness 360
    from aif360.datasets import BinaryLabelDataset
    from aif360.metrics import BinaryLabelDatasetMetric

    dataset = BinaryLabelDataset(...)
    metric = BinaryLabelDatasetMetric(dataset, ...)
    print(metric.mean_difference())

10. Continuous Learning and Improvement

The field of LLM and AI is rapidly evolving. Stay informed about the latest research, tools, and best practices. Encourage continuous learning within your team and consider partnerships with academic institutions or industry consortia to stay at the forefront of LLM technology.

Conclusion

By integrating these best practices into the lifecycle of LLM application development, teams can navigate the complexities inherent in working with large language models. These guidelines serve not only to enhance the technical robustness of LLM applications but also to ensure they are developed in an ethically responsible and secure manner. The StreamDeploy Dockerfile Generator Tool’s architecture provides a solid foundation, and adherence to these practices will further ensure that LLM applications are built to the highest standards of excellence.

Give StreamDeploy a Try Now

We aren’t keeping these lessons learned to ourselves; we’re empowering other teams. StreamDeploy is designed to help deploy LLM applications quickly and securely. Give StreamDeploy a try today, and schedule a call to see how we can help your organization build and launch your new or existing LLM application.